Author: Nurettin Mert AYDIN, Group Manager

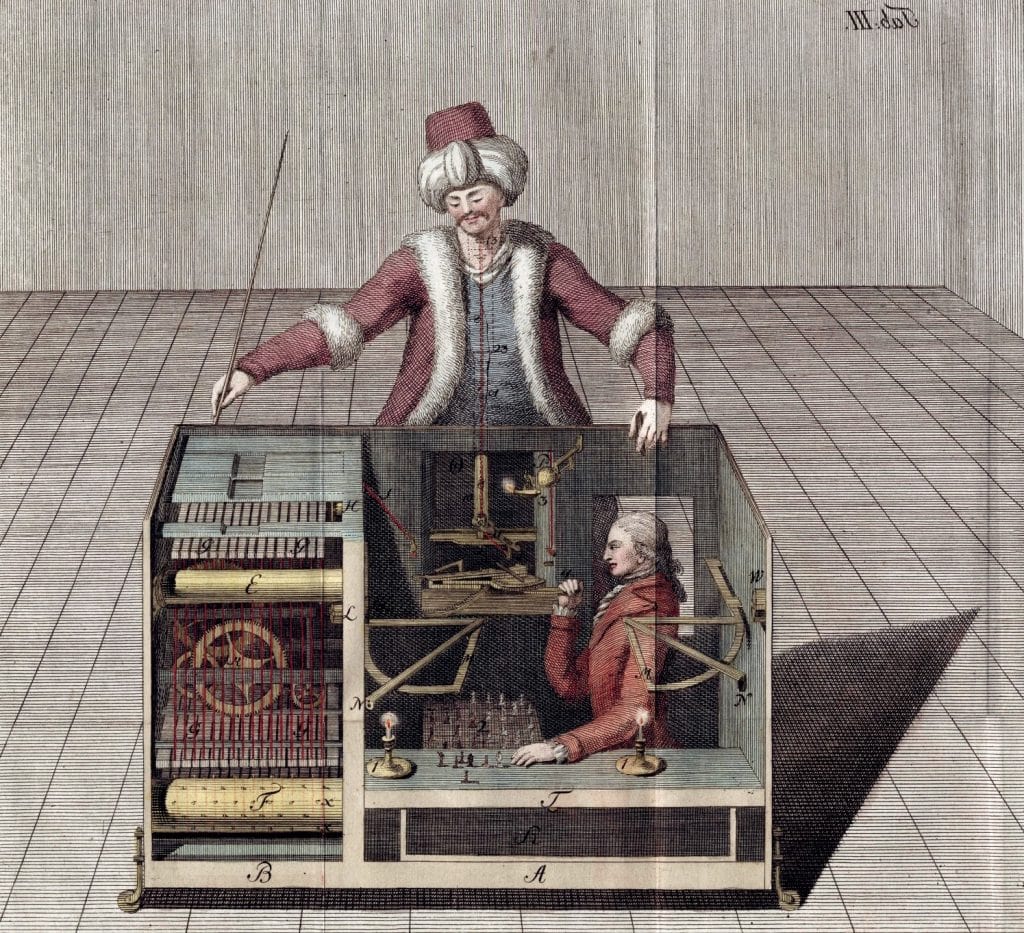

Back in the 1770s, when Wolfgang von Kempelen built the Mechanical Turk, he was overly confident that it would soundly beat Maria Theresa in a chess match. Yet he did not take into account that the sweet talk of the human hidden inside the machine, who was later caught out because of several errors, would be more appealing than the device itself. Is it really the talk, that sweet talk we perform to explain and excuse our errors as humans, that renders machines and programs somehow inferior beings that lack “something”? Well, this is an almost-gibberish article involving the Mechanical Turk and the Turing test.

The Mechanical Turk was a one-of-a-kind wooden device featuring a mannequin with moving mechanical parts that appeared to play chess. A small compartment inside this giant unit allowed a human being to hide and actually play the game, deciding on and making the moves. The mannequin was simply controlled by that hidden human.

Some say that the Mechanical Turk was built to fool people. But the truth is, it was purposefully made to order. It is still associated with the origins of artificial intelligence, as an effort to imagine the human mind being beaten by something humans themselves produced. And all the errors and glitches are included as a bonus!

Almost two hundred years after the Mechanical Turk, in 1950, when Alan Turing came up with the idea of a test (“The Turing Test”) to probe how human-like a machine is in demonstrating intelligent behavior, he could not escape the criticism that a well-taught parrot might pass this test quite easily and nicely. The test is carried out by a human evaluator, who tries to decide whether the entity behind the curtain is a real human or not by evaluating its answers to certain questions. Would the questioned entity make any errors?

Along with this wonderful idea about a test, Turing boldly asked the famous question:

“Can machines think?”

And he immediately changed his question to:

“Can machines do what we can do?”

This reframing is thoughtful and powerful when we go back to the time when the Mechanical Turk was built, or when its requirements were being gathered: Would the Mechanical Turk do what a human could do? Yes. It would play chess and it would make errors. Could it carry out these activities on its own? No.

Today we are proud of the systems, software and machines we build. The vast majority of us know that there might be flaws, glitches and room for improvement, but we no longer spend much time or thought on the line between man and machine. It is more convenient to seek machine “with” man, or rather machine “for” man. There is another key factor, though, a fine line that needs more attention.

That key factor seems to be nothing but self-directed decisions, or autonomous behavior. Today we see autonomous vehicles driving on their own, drones exhibiting various behaviors based on the sensor data they collect, and software deciding whether to grant credit to bank customers or choosing the best embryo to transfer. By the way, autonomy has a lot to do with the capability to make errors as well.

Nevertheless, what the Mechanical Turk did was simply a series of collections and inspections built around a turn-based approach, and in that sense the Mechanical Turk was similar to Turing’s test as well. Today, we are not ruling out this approach. Rather, we are decorating it to focus on autonomous behavior. Machines, through software, collect data, process it, a galaxy-load of it, and act upon the inference to “do” something. It is still an interesting question, though:

“Can machines do what we can do?”

And what we do, in an artistic way, is to err. Our errors define who we are, whether we will succeed or fail in the next round, and whether we are going to learn from the whole process. And it is apparent that systems today can enhance themselves in the same manner. They even encounter more errors than humans do. There is just one minute detail: They are not aware that those things are classified as “errors”.

And last but not least… You might want to check the following books for more in-depth information:

[1] Standage, Tom. “The Mechanical Turk: The True Story of the Chess-playing Machine That Fooled the World”

[2] Epstein, Roberts, Beber. “Parsing the Turing Test: Philosophical and Methodological Issues in the Quest for the Thinking Computer”